Artificial Intelligence

What is Artificial Intelligence?

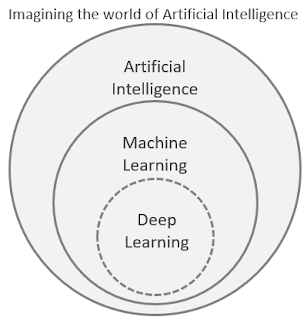

Artificial Intelligence is the broader body of techniques that enable machines to mimic human intelligence. It includes traditional algorithms where the logic to perform a task or process is codified upfront. This is automation, which is much of what is hyped as Artificial Intelligence these days. Examples include if-then-else rules, macros used for automating tasks in spreadsheets, and software tools and utilities that take a defined action when they see a known input. AI in its early stages was mostly based on rule-based systems, whose ability to deliver value is limited by how well the rules are defined, which in turn requires human expertise.

Machine learning is a subset of AI that includes algorithms where the logic to perform a task or process is NOT codified upfront. These algorithms parse data, learn from that data, and make informed decisions based on what they have learned. They use statistical methods to improve at tasks with experience, and they rely on humans to pre-process data and provide the initial intelligence to take action. Once a model is trained, it is put to work on real-time data (inferencing), and it can be retrained or updated as new data arrives. Thus, an element of dynamism is introduced.

Deep learning is a subset of machine learning that uses neural networks, which are loosely modelled on the human brain. Here too, the logic to perform a task or process is NOT codified upfront. These algorithms interpret data features and relationships using neural networks. They are largely self-directed in the relevant analysis: they extract features, adapt, and learn with little human intervention. Thus, an element of autonomy is introduced.

Machine learning can be classified into supervised learning, unsupervised learning, and reinforcement learning.

Another way to slice and dice the machine learning domain is to compare it with human intelligence. Broadly, it is then classified into artificial narrow intelligence, artificial general intelligence, and artificial super intelligence. We are only just beginning to scratch the surface of artificial general intelligence.

The AI paradigm

A good way to understand AI is to understand what it does. AI, at the end of the day, is computer-based automation, where computers are programmed to do a certain set of tasks based on algorithms. Often a set of inputs is provided and we expect a set of outputs from this automation. It is essentially a transformation of inputs into outputs. The traditional way of automating a task is to get an expert to tell us (in his or her wisdom) the rules that transform an input into an output. These rules are then encoded into computer code and deployed into the real world. These pieces of code take inputs from the real world and transform them, based on the rules, into outputs that can then be used in the real world.

With the AI paradigm, the creation of rules is "outsourced" to algorithms (machine learning algorithms), which "learn" to create a set of rules (or equations). Machine learning is turning data into numbers and finding patterns in those numbers using algorithms. These algorithms create the rules to find some method to the madness in the input-output data observed in the real world. The algorithms would refine the rules (akin to extracting knowledge from real-world input-output). This is similar to an individual in the real world who learns to become knowledgeable in a domain and who is then referred to as an expert.
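The contrast between the two paradigms can be sketched in a few lines of Python. This is a minimal illustration, not a production method: the temperature scenario, the observations, and the names `expert_rule`, `learn_threshold`, and `learned_rule` are all made up for the example. The "learning" here is the simplest kind imaginable, picking the single cut-off that misclassifies the fewest observed input-output pairs.

```python
# Traditional automation: an expert states the rule and we encode it by hand.
def expert_rule(temperature_c: float) -> bool:
    """Hypothetical hand-written rule: switch cooling on above 30 degrees."""
    return temperature_c > 30.0


# Machine learning: the rule (here, a single threshold) is derived from
# observed input-output pairs instead of being supplied by an expert.
def learn_threshold(samples: list[tuple[float, bool]]) -> float:
    """Return the cut-off that misclassifies the fewest training samples."""
    values = sorted({x for x, _ in samples})
    # Candidate cut-offs: midpoints between neighbouring observed inputs.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(threshold: float) -> int:
        # Count samples where "input above threshold" disagrees with the label.
        return sum((x > threshold) != label for x, label in samples)

    return min(candidates, key=errors)


# Made-up observations: (temperature in Celsius, was cooling switched on?)
observations = [(18.0, False), (22.0, False), (25.0, False),
                (27.0, True), (31.0, True), (35.0, True)]

threshold = learn_threshold(observations)


def learned_rule(temperature_c: float) -> bool:
    """The rule produced by the data rather than by the expert."""
    return temperature_c > threshold


print(f"learned threshold: {threshold}")
print(f"28 degrees -> expert: {expert_rule(28.0)}, learned: {learned_rule(28.0)}")
```

Both functions have the same shape (input in, decision out). The difference is where the number inside the comparison comes from: the expert's judgement in one case, the observed data in the other. Real machine learning algorithms fit far more parameters than one threshold, which is why their rules are harder to read.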

These machine-learned equations can be complex and are usually incomprehensible to the human mind at first glance. They are only as good as the data used, with the usual risk of programming errors layered on top. It is often not possible to understand the underlying logic and the drivers. Hence, it is often advisable not to use machine learning if a simple rule-based system can do the job.

Why is AI trending today?

A lot of the talk about AI these days is due to hype created around automation that has been in existence since the dawn of computing, for example a macro written to make a task or process quicker or more efficient. This is a mere adaptation of plain old computer programming to our day-to-day activities. The proliferation of code in our everyday machines gives us the sense that they are doing something “intelligent” based on their interaction with their surroundings, when in reality they might be executing a simple algorithm in the old-school way. Your mobile phone sending a message to your connected air conditioner to switch on and create a comfortable environment while you are 5 miles away from home is not artificial intelligence. It is an example of connected living or technology-assisted living.

The AI implied in the hype, on the other hand, is supposed to have an element of dynamism and autonomy and is in the realm of Machine Learning.

AI has become mainstream today due to four key trends:

- Availability of higher computational power – this has been made possible by the advent of GPUs, better CPU architectures, and parallel computing, which have made processing large amounts of data computationally cheaper and hence affordable enough to justify a business case.

- Large amounts of data and the inability of humans or traditional algorithms to mine it meaningfully – there is a commercial need to analyse and process the data to draw useful insights and patterns that help grow the business or solve problems.

- Improvements in algorithms to handle large data – algorithms have developed to solve complex problems where the process cannot be codified, and to handle big or new data efficiently.

- Capital investments in AI – AI solutions have the potential to improve productivity and customer experience, and thus improve business prospects for companies. With computational power getting cheaper and algorithms improving, it is now possible to justify a business case for AI solutions based on large and complex data.

Adding math to everything around us

Solving some problems can be tricky. In general, anything that involves subjectivity will not yield a perfect model. Even if a model is developed (using past data), it will quickly degrade. The instability in the model and the errors can be demotivating. Subjectivity is introduced by our belief systems and by our ability to control and channel (disguise) our emotions, to express them wrongly (wrong choice of words or grammar), or to express them inventively (e.g. sarcasm, slang). Emotions can be eliminated or reduced when we work in a procedure-driven or rule-based environment. The data generated by such a system will lead to better (lower-loss) and more stable models.

Nature (other than human beings) has no emotion, or does not control emotion (it is free flowing). At the same time, there are many variables governing the outcomes in nature at any point in time. At some level everything can be translated into mathematical expressions. Hence, ML is best placed to “fit a logic” or “find a pattern” in the data collected from nature. This includes weather forecasting, fire hazard forecasting, environmental changes, etc.

Some systems generate data based on the actions of a large number of humans. Our interest in looking at that data is to understand what that large number of humans will do. The presence of a large number of humans almost eliminates the “disguise” or “concealment” of emotions. A good example is stock market data. Our interest is always to know what the market (and not specific individuals) will do next.

Building models on large data (which eliminates biases from emotions and idiosyncrasies) and then using the model to process an individual’s input will inherently have a higher chance of error. The supervision and validation are done on large datasets (which removes emotional biases and idiosyncrasies), but the models are then used to process individual data points, which do carry emotional biases, disguise, and idiosyncrasies. The following supervised learning models are examples where this issue is present:

- Natural language processing, e.g. chatbots, sentiment analysis, topic classification, text mining (e.g. mining medical or legal repositories to come up with good suggestions). These are essentially classification problems (supervised learning).

- Image processing, e.g. OCR, biometrics, face recognition, X-ray images for diagnosis, driverless navigation. These are essentially classification problems (supervised learning).

- Speech recognition which is a supervised learning problem.
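The gap between predicting a crowd and predicting an individual can be illustrated with a small simulation. This is a toy sketch under stated assumptions, not a model of any real dataset: each individual's response is assumed to be a shared signal plus independent personal noise, and `SHARED_SIGNAL`, `IDIOSYNCRASY_SD`, and `simulate_population` are hypothetical names and values chosen for the example. The "model" is simply the average response seen in training.

```python
import random
import statistics

rng = random.Random(0)  # fixed seed so the run is repeatable

SHARED_SIGNAL = 5.0    # hypothetical behaviour common to the whole population
IDIOSYNCRASY_SD = 1.0  # hypothetical spread of individual quirks


def simulate_population(size: int) -> list[float]:
    """Each individual's response = shared signal + personal noise."""
    return [SHARED_SIGNAL + rng.gauss(0.0, IDIOSYNCRASY_SD) for _ in range(size)]


# "Train": the simplest possible model is the average response in a large dataset.
training = simulate_population(10_000)
model_prediction = statistics.fmean(training)

# "Use": apply the same prediction to a fresh population.
fresh = simulate_population(10_000)

# Error when the model is asked what the crowd as a whole will do.
aggregate_error = abs(statistics.fmean(fresh) - model_prediction)

# Average error when the model is asked what each single individual will do.
individual_error = statistics.fmean([abs(x - model_prediction) for x in fresh])

print(f"error predicting the crowd average: {aggregate_error:.3f}")
print(f"average error per individual:       {individual_error:.3f}")
```

In this setup the individual quirks largely cancel out in the average, so the crowd-level error comes out much smaller than the per-individual error, even though the same model is used for both.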

In general the following flowchart can be used to understand the efficacy of the model in the context of emotional biases:

Note that in all cases the efficacy is directly impacted by the quality and quantity of data. All else being equal, the efficacy, stability, and ability to generalize are better for models that do not involve emotions.